Duplicate content can seriously harm your site, so we’ve put together our favorite free duplicate content checkers or plagiarism checking tools for your use.

Why You Should Take Advantage Of Duplicate Content Checkers

Search engines want to provide valuable, original content, so they regard plagiarism as a threat to their users’ experience. When a search engine indexes a web page, it scans the page’s content and compares it with the content of other indexed pages. If a page is found to contain plagiarized content, search engines will often penalize it by lowering its rankings or removing it from search results entirely. Given how serious these penalties can be, you should check both your existing web content and any content you plan to publish for duplication.

The Best Free Plagiarism Checker Tools For Your Web Content

Even if you are confident your website’s content wasn’t plagiarized, it’s worth checking to make sure nothing was unintentionally duplicated. To help you complete this task (and ensure that your site’s rankings stay healthy and unpenalized), here are our four favorite free duplicate content checker tools:

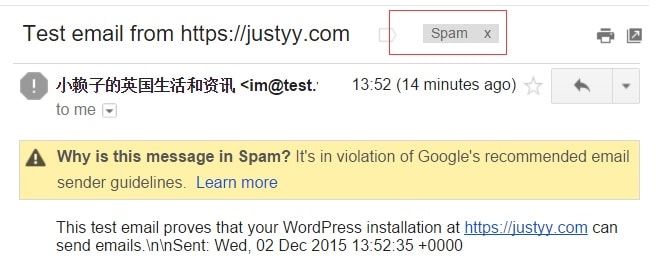

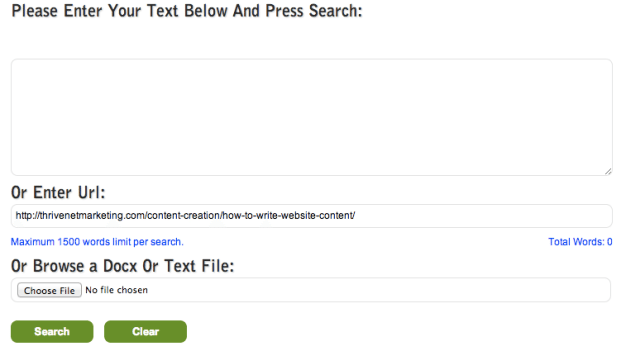

This free plagiarism checker tool lets you search by text, by DocX or text file, or by URL. Searches are unlimited once you register (you’re allowed one free search before signing up). Our scan for duplication completed in just a few seconds (of course, this will depend on the length of the text, page, or file you’re scanning). It’s simple, free, and effective!

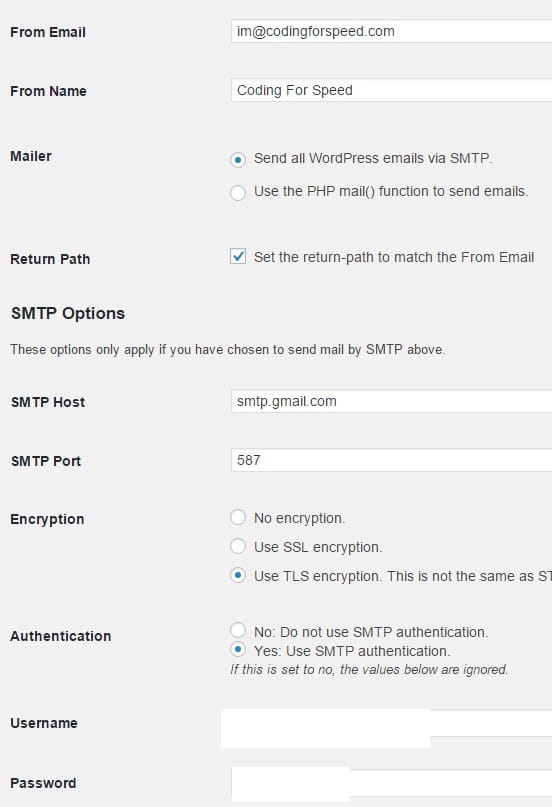

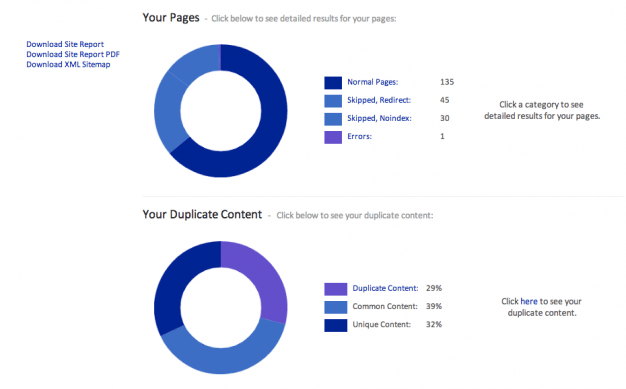

For checking entire websites for duplicate content, there is SiteLiner. Simply paste your site’s URL in the box and it will scan for duplicate content, page load time, the number of words per page, internal and external links, and much more. Depending on the size of your site, the scan can take a few minutes, but the results are well worth the wait. Once the scan is complete, you can click on the results for greater detail and even download a report of the scan as a PDF.

Note: The free SiteLiner service is limited to one scan per site per month, but the SiteLiner premium service is very affordable (each page scanned costs only 1c, and you can scan as often as you wish).

The PlagSpotter URL search is free, quick, and thorough. Scanning a web page for duplicate content took just under a minute with 49 sources listed, including links to those sources for further review. There is also an “Originality” feature that allows you to compare text that has been flagged as duplicated. While PlagSpotter’s URL search is free, you can sign up for their no-cost 7-day trial to enjoy a plethora of useful features, including plagiarism monitoring, unlimited searches, batch searches, full site scans, and much more. If you wish to continue using PlagSpotter after the free trial, the paid version is extremely affordable.

Copyscape offers a free URL search, with results coming in just a few seconds. While the free version doesn’t do deep searches (breaking down the text in order to search for partial duplication), it does a thorough job of finding exact matches. If you have found two URLs or text blocks that appear similar, Copyscape has a free comparison tool that will highlight duplicate content in the text. While the free service allows only a limited number of searches per site, Copyscape’s Premium (paid) account offers unlimited searches, deep searches, text excerpt searches, full-site searches, and monthly plagiarism monitoring.
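To make the difference between exact matches and partial duplication more concrete, here is a minimal Python sketch of one common way to compare two text blocks: splitting each into overlapping word groups ("shingles") and measuring how many they share. This is an illustration only, not how Copyscape or any tool listed here actually works; the function names `shingles` and `jaccard_similarity`, the three-word group size, and the sample sentences are all our own assumptions.

```python
import re


def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """Return the set of overlapping word groups (shingles) found in text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < size:
        # Text shorter than one shingle: treat the whole text as a single group.
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard_similarity(a: str, b: str, size: int = 3) -> float:
    """Share of shingles the two texts have in common, from 0.0 to 1.0."""
    sa, sb = shingles(a, size), shingles(b, size)
    if not sa and not sb:
        return 0.0
    return len(sa & sb) / len(sa | sb)


# Hypothetical sample texts, used only for illustration.
original = "Search engines want to provide valuable, original content to their users."
partial = "We know search engines want to provide valuable, original content for readers."

print(jaccard_similarity(original, original))  # identical text scores 1.0
print(round(jaccard_similarity(original, partial), 2))  # partial overlap scores between 0 and 1
```

A score of 1.0 corresponds to an exact match, while a score somewhere between 0 and 1 suggests that part of one text was reused in the other. Real checkers are far more sophisticated, but the idea of breaking text into smaller pieces before comparing is the same.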

Notable Copy Checking Mentions:

Update! When we originally wrote this in 2014, there were very few plagiarism or duplicate content checking tools on the market. The list has expanded dramatically and now includes many new options, including the following honorable mentions:

Now you know our duplicate content tool recommendations – have any of your own?

We hope that the resources we’ve listed above will help you write quality web content without worrying that your website or blog will be penalized for duplicate content. If you’ve already been using a duplicate content checker for your website or blog, we’d love for you to share your own recommendations or experience in the comments below. If you’d like to learn more about content writing and how it can benefit your site, contact us and we’ll help you devise an effective strategy for your site.